There is more good news for solutions built on AWS VPC. AWS recently announced the availability of the Internal Elastic Load Balancer within Virtual Private Cloud (VPC). The AWS community has been asking for this for quite a while, and AWS listens to the community and prioritizes its product offerings accordingly.

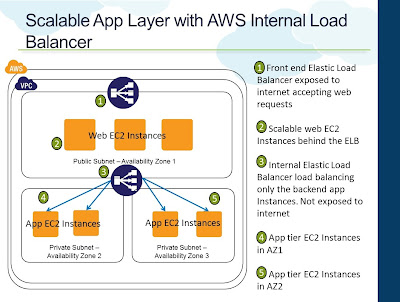

Amazon's Elastic Load Balancing (ELB) is the internet-facing load balancing service. It can load balance web servers, and the web server layer can be scaled behind ELB. Usually, though, the scaling requirements don't end with the web layer: the backend layers behind it, such as the application tier and the database, often need to scale as well. When you have both a dynamic web layer and a dynamic application layer, the obvious challenge for architects is how the dynamic servers find each other. One solution is to introduce an "app load balancer" in between that load balances the backend application tier Instances. The web tier Instances are configured to talk to the "app load balancer", so the app tier can scale behind it while the web tier keeps connecting to the same load balancer.
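A minimal Python sketch of the idea: the web tier is configured with one stable name, app Instances register and deregister behind it as the tier scales, and each request goes to the next registered Instance in round-robin order. The class and Instance names are hypothetical, and a real load balancer also health-checks and proxies connections, which this toy omits.

```python
from itertools import count
from typing import List


class RoundRobinBalancer:
    """Toy model of the "app load balancer": one stable name in front of
    a pool of backend Instances that can change at any time."""

    def __init__(self, name: str) -> None:
        self.name = name                 # the only address the web tier is configured with
        self._backends: List[str] = []   # currently registered app Instances
        self._requests = count()         # running request counter

    def register(self, backend: str) -> None:
        """Add an Instance when the app tier scales out."""
        if backend not in self._backends:
            self._backends.append(backend)

    def deregister(self, backend: str) -> None:
        """Remove an Instance when the app tier scales in."""
        if backend in self._backends:
            self._backends.remove(backend)

    def route(self) -> str:
        """Pick the backend for the next request, round robin."""
        if not self._backends:
            raise RuntimeError(f"{self.name}: no backends registered")
        return self._backends[next(self._requests) % len(self._backends)]


# Hypothetical usage: the web tier only ever talks to "app-lb".
lb = RoundRobinBalancer("app-lb")
lb.register("app-1")
lb.register("app-2")
print([lb.route() for _ in range(4)])  # ['app-1', 'app-2', 'app-1', 'app-2']
lb.register("app-3")                   # scale out; the web tier's config is unchanged
```

Scaling the app tier only changes the balancer's registrations; the web tier's configuration stays the same.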

But the architecture has the following drawbacks:

- Single Point of Failure - the "app load balancer" becomes the single point of failure in the entire architecture. If that Instance becomes unavailable for any reason, the application goes down

- Scalability - the "app load balancer" layer does not scale, and if we do scale it out, we are back to square one: the web tier cannot identify the dynamic load balancer Instances

- Management - another layer that needs to be managed and monitored

The AWS Internal Elastic Load Balancer (I am calling it iELB :) ) is the solution for this requirement. We can place the iELB between the web and application tiers, make the application tier scalable, and leave the availability, scaling and management of the load balancer itself to AWS. The iELB is available only within VPC, so it is useful only for infrastructure that resides in a VPC.

Apart from using the iELB to scale and load balance the application tier, I found it useful for another use case: scaling the database.

Most web applications are read-intensive, so most of their database queries are reads. To get better database throughput, we can send write and read transactions to different database Instances. If you are using Amazon RDS, you can launch RDS read replicas to serve the reads, and to scale read operations you can launch as many read replicas as the load requires. But there aren't good JDBC drivers that can load balance across multiple read replica Instances. Even where a driver can load balance across multiple DB Instances, adding and removing Instances dynamically is difficult: we would have to push configuration changes to the application tier every time we scale the read replicas out or in. Using the iELB as the load balancer between the application tier and the database addresses this. The resulting architecture looks like the one below.

Note: As of today, only EC2 Instances can be added behind the iELB. When we create a read replica, we don't get an Instance Id for it, only its endpoint URL. Hence this architecture cannot currently be implemented as described.
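Whatever ends up in front of the replicas (the iELB once it can register them, or an HAProxy Instance as discussed in the comments), the application tier only has to choose between two endpoints: the primary for writes and the load balancer for reads. A minimal Python sketch of that routing decision follows. The endpoint names are hypothetical, and classifying a statement by its first keyword is a deliberate simplification.

```python
from dataclasses import dataclass

# Statements whose first keyword marks them as reads (a simplification).
READ_KEYWORDS = frozenset({"SELECT", "SHOW", "DESCRIBE", "EXPLAIN"})


@dataclass(frozen=True)
class DbEndpoints:
    primary: str   # hypothetical RDS primary endpoint, receives all writes
    read_lb: str   # hypothetical load balancer in front of the read replicas


def endpoint_for(sql: str, endpoints: DbEndpoints, in_transaction: bool = False) -> str:
    """Return the endpoint a statement should be sent to."""
    # Inside a transaction, stay on the primary: replicas replicate
    # asynchronously and may not yet have the transaction's own writes.
    if in_transaction:
        return endpoints.primary
    normalized = " ".join(sql.split()).upper()
    first = normalized.split(" ", 1)[0]
    # Locking reads (SELECT ... FOR UPDATE) must also run on the primary.
    if first in READ_KEYWORDS and "FOR UPDATE" not in normalized:
        return endpoints.read_lb
    return endpoints.primary


eps = DbEndpoints(primary="db-primary.internal", read_lb="db-read-lb.internal")
print(endpoint_for("select * from orders", eps))               # db-read-lb.internal
print(endpoint_for("UPDATE orders SET status = 'paid'", eps))  # db-primary.internal
```

The number of replicas behind `read_lb` can change without touching this code or its configuration, which is the point of putting a load balancer there.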

Here are some of the highlights of this architecture:

Security

- VPC - the entire infrastructure resides in a VPC, which provides network isolation within AWS and the ability to connect to your datacenter over VPN

- Public and Private Subnets - place the different layers in public and private subnets. Instances in the public subnet are exposed to the internet; Instances in the private subnet are accessible only from within the VPC

- Security Groups - create Security Groups for the public Elastic Load Balancer and the Internal Load Balancer. Configure the web servers to accept requests only from the public ELB, the iELB to accept requests only from the Web Security Group, and the RDS Security Group to accept requests only from the iELB
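The Security Group chain can be written down as data and checked. The sketch below is a plain-Python model of the rules, not AWS API calls: the group names and ports (80 for HTTP, 3306 for MySQL) are assumptions, and each group lists the sources it accepts traffic from.

```python
from typing import Dict, FrozenSet, Tuple

INTERNET = "0.0.0.0/0"

# Hypothetical Security Groups and ports. Each group maps to its inbound
# rules, written as (source, TCP port) pairs.
INBOUND_RULES: Dict[str, FrozenSet[Tuple[str, int]]] = {
    "public-elb-sg": frozenset({(INTERNET, 80)}),
    "web-sg": frozenset({("public-elb-sg", 80)}),
    "ielb-sg": frozenset({("web-sg", 3306)}),
    "rds-sg": frozenset({("ielb-sg", 3306)}),
}


def is_allowed(source: str, target: str, port: int) -> bool:
    """True if `target`'s inbound rules admit traffic from `source` on `port`."""
    return (source, port) in INBOUND_RULES.get(target, frozenset())


# Each layer is reachable only from the layer directly in front of it.
assert is_allowed("web-sg", "ielb-sg", 3306)
assert not is_allowed("web-sg", "rds-sg", 3306)  # web servers cannot bypass the iELB
assert not is_allowed(INTERNET, "web-sg", 80)    # the internet cannot bypass the public ELB
```

In the real setup, these are Security Group inbound rules that name a source Security Group rather than an IP range.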

Scalability

- Web/App Servers - the web servers in the public subnet can be scaled automatically, and Elastic Load Balancing load balances and fails over between the available web EC2 Instances

- Database - launch additional read replicas as and when required to scale read requests. Because the application tier connects only to the iELB endpoint, the scaling action remains transparent to it

High Availability

- Multiple Subnets - use multiple subnets for every layer in the architecture. Each subnet resides in a single Availability Zone (AZ), so spreading each layer across subnets in different AZs mitigates an AZ-level failure (which is rare)

- RDS Multi AZ - launch RDS in Multi-AZ mode so that it automatically fails over from the primary to the standby if the primary fails

4 comments:

Hi man..... I created multiple read replicas, but each of them has a different endpoint.... So how/where does the actual load balancing of the app's read requests happen? Do we need to specify each read replica's endpoint in the code, or is there a load balancer setup for RDS in AWS?

Your comments on this would be greatly appreciated.

Thanks in advance

Unni

If you are setting this up within VPC, then as I mentioned, you can create an internal Elastic Load Balancer: when you create the ELB, choose VPC and launch it. Then you can place the read replicas behind the ELB and point your application at the ELB endpoint. The ELB will round robin between the read replicas. Otherwise, you can run an HAProxy load balancer on an EC2 Instance and add the read replicas to it. Your application connects to the HAProxy LB, which round robins across all the read replicas. Basically, you need a LB in between

Hi Raghu,

I'm very keen to hear an update on your experience using iELB for MySQL read replicas.

Have you been using this as a solution in production? Any gotchas or issues you've discovered?

When I put this particular question to several Solutions Architects at AWS, they said it wasn't a recommended use of iELB.

Therefore this is not a solution I'm considering for MySQL; instead I am implementing active/passive HAProxy in VPC.

Hi Andrew,

As I highlighted in the post, currently you can only add EC2 Instances behind the iELB. RDS Read Replicas don't come with Instance Ids, so you cannot add them behind the iELB in the first place.

Secondly, unlike HAProxy, ELB doesn't do a MySQL health check; it can primarily do only TCP or HTTP health checks. A TCP check opens a connection to MySQL and drops it without completing the login, and once a host accumulates enough of these interrupted connections in a row (the `max_connect_errors` limit), MySQL blocks all further connections from that host. That's why HAProxy has a MySQL check (`option mysql-check`) that we need to configure whenever we use HAProxy to load balance MySQL. So that is another limitation of ELB.
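To see why, here is roughly what a TCP health check amounts to, as a Python sketch (an illustration, not ELB's actual implementation). It opens a connection and closes it immediately, without ever answering the MySQL server's handshake, so MySQL sees every probe as a connection attempt that never completed.

```python
import socket


def tcp_health_check(host: str, port: int, timeout: float = 2.0) -> bool:
    """Healthy if a TCP connection can be opened. The connection is closed
    right away without sending anything, so a MySQL server on the other end
    sees a connection attempt that never completed its handshake."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused, unreachable or timed out: report the target as unhealthy.
        return False
```

A protocol-aware check such as HAProxy's `option mysql-check` configured with a check user instead logs in and sends a proper QUIT, so the probe looks like a normal, completed MySQL session.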

AWS introduced iELB primarily to load balance the application tier in a 3-tier architecture. The post explored whether we could extend that to load balance read replicas and MySQL slaves.

So, if you are looking at load balancing multiple MySQL Instances in VPC, go ahead and use HAProxy for that.
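With HAProxy, one way to handle replicas being added or removed is to regenerate its configuration from the current list of replica endpoints and then reload HAProxy. The Python sketch below renders a `listen` section. The section name, check user and host names are hypothetical, while `mode tcp`, `balance roundrobin`, `option mysql-check user` and `server ... check` are standard HAProxy directives. The check user has to exist on every replica without a password, because HAProxy logs in as it without one.

```python
from typing import Iterable


def render_mysql_listen(replicas: Iterable[str], check_user: str = "haproxy_check",
                        port: int = 3306) -> str:
    """Render an HAProxy 'listen' section that round-robins MySQL
    connections across the given read replica endpoints."""
    lines = [
        "listen mysql-read-replicas",
        f"    bind 0.0.0.0:{port}",
        "    mode tcp",                 # MySQL is proxied at the TCP level
        "    balance roundrobin",
        # Log in as check_user and QUIT cleanly, so health checks are not
        # counted as aborted connections by MySQL.
        f"    option mysql-check user {check_user}",
    ]
    for i, host in enumerate(replicas, start=1):
        lines.append(f"    server replica{i} {host}:{port} check")
    return "\n".join(lines) + "\n"


print(render_mysql_listen(["replica-1.internal", "replica-2.internal"]))
```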
