Last week, AWS had an outage in one of its data centers. Unlike last year's, this outage had a smaller impact and affected only one Availability Zone in the US-East region. None of the customers whose infrastructure we had set up in AWS suffered any downtime. One customer panicked and reported Instances becoming unreachable, only to find that the Instances in the other AZs were running fine and the website remained available. More interesting still, the next day one of India's leading entertainment content providers got in touch and asked us to evaluate their existing AWS infrastructure. While talking to the customer, I casually asked the main reason for the evaluation. He said that their website is hosted entirely in AWS and that it went down during the outage. He was not aware that the website was down until someone on their team found out by accident.

Here's the update from AWS on what went wrong. The full information is available on the AWS Service Health Dashboard page, but it might become difficult to access by next week as the date widget moves ahead.

"At approximately 8:44PM PDT, there was a cable fault in the high voltage Utility power distribution system. Two Utility substations that feed the impacted Availability Zone went offline, causing the entire Availability Zone to fail over to generator power. All EC2 instances and EBS volumes successfully transferred to back-up generator power. At 8:53PM PDT, one of the generators overheated and powered off because of a defective cooling fan. At this point, the EC2 instances and EBS volumes supported by this generator failed over to their secondary back-up power (which is provided by a completely separate power distribution circuit complete with additional generator capacity). Unfortunately, one of the breakers on this particular back-up power distribution circuit was incorrectly configured to open at too low a power threshold and opened when the load transferred to this circuit. After this circuit breaker opened at 8:57PM PDT, the affected instances and volumes were left without primary, back-up, or secondary back-up power. Those customers with affected instances or volumes that were running in multi-Availability Zone configurations avoided meaningful disruption to their applications; however, those affected who were only running in this Availability Zone, had to wait until the power was restored to be fully functional."

Utilize Multiple Availability Zones

Or AZs for short. AWS provides multiple Availability Zones in each region. Availability Zones are distinct locations that are engineered to be insulated from failures in other Availability Zones and provide inexpensive, low-latency network connectivity to other Availability Zones in the same Region. Additionally, in this FAQ answer, AWS clearly states that common points of failure such as generators and cooling systems are not shared between AZs, and that each AZ is physically separate and independent. The recent outage was primarily caused by a fault in the power distribution system, and since AZs are isolated, only one AZ (us-east-1c) in the US-East region was affected. The remaining AZs kept running without any interruption. Hence it is prudent to distribute your infrastructure across multiple AZs: spread your EC2 Instances across them and bring up your HA quotient.

Note: You need not worry about performance issues from deploying Instances across different AZs. The network latency between AZs is very low and can be ignored for web applications.
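To make the benefit of spreading Instances concrete, here is a minimal sketch in Python. It is a toy model, not an AWS API call: the function names are hypothetical, and apart from us-east-1c the zone names are assumed for illustration. It places a fleet round-robin across AZs and computes how much of it survives the loss of one AZ.

```python
from collections import Counter
from itertools import cycle


def spread_across_azs(instance_count, zones):
    """Assign instances to zones round-robin so each zone gets a near-equal share."""
    zone_cycle = cycle(zones)
    return [next(zone_cycle) for _ in range(instance_count)]


def surviving_fraction(placement, failed_zone):
    """Fraction of instances still running after one zone is lost."""
    if not placement:
        return 0.0
    survivors = sum(1 for zone in placement if zone != failed_zone)
    return survivors / len(placement)


# Hypothetical fleet of six web servers. Only us-east-1c is named in the post;
# the other two zone names are assumptions for illustration.
zones = ["us-east-1a", "us-east-1b", "us-east-1c"]
multi_az = spread_across_azs(6, zones)
single_az = spread_across_azs(6, ["us-east-1c"])

print(Counter(multi_az))                            # two instances per zone
print(surviving_fraction(multi_az, "us-east-1c"))   # two thirds of the fleet survives
print(surviving_fraction(single_az, "us-east-1c"))  # nothing survives
```

With three AZs, losing one leaves two thirds of the fleet running, so the remaining Instances need enough headroom to absorb the extra load.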

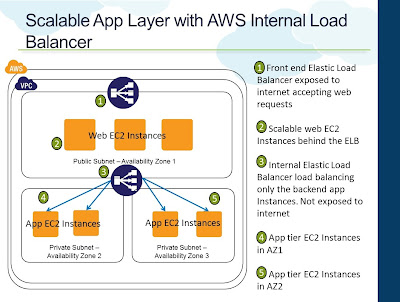

Configure ELB to use Multi AZ

AWS Elastic Load Balancer (ELB) can be configured to work across multiple AZs. This way you can launch EC2 Instances in multiple AZs and tie them to a single ELB. In the event of a failure of one AZ, you will still have Instances running in the other AZs; ELB will fail over to them and your website will remain operational. When you create an ELB through the AWS Management Console, you do not get the option to specify the AZ distribution. However, when you add Instances running in multiple AZs to the ELB, it is automatically configured to work across those AZs.

If you are using the command line interface or the APIs, you can specify the AZs that the ELB needs to handle while creating the ELB itself:

elb-create-lb LoadBalancerName --availability-zones value[,value...] --listener "protocol=value, lb-port=value, instance-port=value, [cert-id=value]" [ --listener "protocol=value, lb-port=value, instance-port=value, [cert-id=value]" ...] [General Options]

Additionally, if you create an AutoScalingGroup and specify the same list of Availability Zones, you automate the whole failover process.

as-create-auto-scaling-group AutoScalingGroupName --availability-zones value[,value...] --launch-configuration value --max-size value --min-size value [--cooldown value ] [--load-balancers value[,value...] ] [General Options]

In this case, the moment the Instances in one AZ went down:

- AutoScaling would have tried to launch new Instances to replace the failed ones

- The AWS APIs would have thrown errors due to AZ unavailability

- AutoScaling would have launched the new Instances in another AZ
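The sequence in the bullets above can be sketched as a small simulation. This is only a toy model of the described behaviour, not how AutoScaling is actually implemented; `launch_instance`, `replace_failed_instance` and `ZoneUnavailableError` are hypothetical names, and the zone names other than us-east-1c are assumed.

```python
class ZoneUnavailableError(Exception):
    """Stand-in for the API error returned when a launch targets a failed AZ."""


def launch_instance(zone, unavailable_zones):
    """Pretend to launch an instance; fail if the zone is down."""
    if zone in unavailable_zones:
        raise ZoneUnavailableError(zone)
    return f"instance-in-{zone}"


def replace_failed_instance(preferred_zone, all_zones, unavailable_zones):
    """Try the original zone first, then fall back to the remaining zones in order."""
    candidates = [preferred_zone] + [z for z in all_zones if z != preferred_zone]
    for zone in candidates:
        try:
            return launch_instance(zone, unavailable_zones)
        except ZoneUnavailableError:
            continue  # this zone is down; try the next one
    raise RuntimeError("no Availability Zone could accept the launch")


zones = ["us-east-1a", "us-east-1b", "us-east-1c"]
replacement = replace_failed_instance(
    "us-east-1c", zones, unavailable_zones={"us-east-1c"}
)
print(replacement)
```

The point of the sketch is that the fallback only works if the group was given more than one AZ to begin with; with a single-zone list, the loop has nowhere else to go.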

Utilize the Multi-AZ feature of RDS, in which RDS runs a standby database Instance in another AZ in addition to the primary database Instance. In the event of a primary failure, RDS internally initiates a failover to the standby and the database continues to serve traffic. AWS mentioned on the RDS status dashboard that none of their Multi-AZ deployments were affected during the outage (failover was triggered). Only the Single-AZ RDS Instances had to be recovered by the AWS team.

"As described in the EC2 post, a number of EBS volumes in the affected Availability Zone were impacted, disabling many Single Availability Zone (Single-AZ) RDS instances. Multi-AZ RDS instances were able to immediately begin failing over to other healthy Availability Zones. We started the recovery process for the affected Single-AZ RDS instances after EBS APIs became available at approximately 10:40 PM PST. Upon completing storage volume checks, we began processing EBS recovery volumes and recovered most of the affected RDS instances by 5:00 AM PDT on June 15th."

There are a couple of things to note here:

- Unlike ELB, RDS failover is not instant. In simple words, the front-end application that connects to RDS will see downtime while the failover happens. Until RDS has completely failed over to the standby, the RDS endpoint will not be reachable. We have seen failover take approximately 5-7 minutes for around 100 GB of data; with more data it might take a little longer.

- With a database the impact is different compared to, say, web servers. One worries more about data consistency and durability than just availability. So when AWS talks above about recovering the Single-AZ RDS Instances, it is actually the recovery of the EBS volumes behind RDS. These EBS volumes became unavailable, and since this was a power failure, it potentially resulted in write corruption. Hence customers were asked to restore to the nearest point-in-time snapshot. With Multi-AZ such data corruption will not arise, since there is an entire replica in another AZ and data is written to both concurrently on a commit.

- Of course, Multi-AZ comes at additional cost, probably double. Running a Single-AZ RDS large Instance in US-East costs about $300/mo, give or take. In a Multi-AZ setup this cost doubles, and AWS would charge around $600/mo. Availability, consistency and data durability versus cost is a trade-off that individual application owners need to decide.
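Because the RDS endpoint is unreachable while a failover is in progress, the application side usually needs some form of reconnect logic. Below is a minimal sketch of retry with exponential backoff. The `connect` callable and `flaky_connect` are hypothetical stand-ins for a real database driver, and the sketch assumes the driver's failure surfaces as Python's built-in `ConnectionError`; a real driver may raise its own exception type.

```python
import time


def connect_with_retry(connect, attempts=5, initial_delay=1.0, max_delay=30.0,
                       sleep=time.sleep):
    """Call connect() until it succeeds, backing off exponentially between attempts."""
    delay = initial_delay
    for attempt in range(1, attempts + 1):
        try:
            return connect()
        except ConnectionError:
            if attempt == attempts:
                raise  # out of attempts; surface the last error
            sleep(delay)
            delay = min(delay * 2, max_delay)


# Hypothetical stand-in for a driver's connect call: it fails twice, as it
# might while the endpoint is moving to the standby, then succeeds.
calls = {"count": 0}


def flaky_connect():
    calls["count"] += 1
    if calls["count"] <= 2:
        raise ConnectionError("endpoint not reachable during failover")
    return "connection"


waits = []  # record the requested delays instead of really sleeping
result = connect_with_retry(flaky_connect, sleep=waits.append)
print(result, waits)
```

The defaults here only cover a few seconds of waiting. For a failover measured in minutes, as observed above, `attempts` and `max_delay` would need to be raised accordingly, and the application should show a sensible error to users in the meantime.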

Stateless Servers

Apart from the persistent store (database) of the application, it is recommended to make all other layers in the infrastructure stateless. Web and application servers in particular need to be designed to be stateless. Most applications store web session data in the web server's memory. Similarly, other assets such as user-uploaded files and server and application log files typically reside on the web/app servers. All of these assets need to move to a central repository. For example, session information can be stored on caching servers or in a database, and files can be periodically rotated to, say, Amazon S3. Once an application is designed this way, it is easy to modify the infrastructure to maintain HA and to scale on demand. Spreading the infrastructure across multiple AZs can definitely help you achieve HA, but the failover will not be transparent to the user if session information is stored in memory. If the session state of hundreds of users is held on Instances in AZ1 and that AZ becomes unavailable, the front-end Load Balancer will immediately fail over to the Instances running in AZ2. But the users' session state will not be available on the AZ2 Instances, and those users will probably be asked to log in again.
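The difference between in-memory and externalized sessions can be shown with a small sketch. Everything here is hypothetical: `WebServer` is a toy class, and a plain dict stands in for the shared caching server or database mentioned above.

```python
class WebServer:
    """Toy web server that keeps sessions either locally or in a shared store."""

    def __init__(self, zone, session_store=None):
        self.zone = zone
        # Without a shared store, each server keeps its own private dict.
        self.sessions = session_store if session_store is not None else {}

    def login(self, session_id, user):
        self.sessions[session_id] = user

    def current_user(self, session_id):
        # None means the session is unknown here: "please log in again".
        return self.sessions.get(session_id)


# Stateful: each server holds sessions in its own memory.
az1, az2 = WebServer("AZ1"), WebServer("AZ2")
az1.login("abc123", "alice")
stateful_result = az2.current_user("abc123")   # request fails over to AZ2

# Stateless: both servers share one external store.
shared_store = {}
az1, az2 = WebServer("AZ1", shared_store), WebServer("AZ2", shared_store)
az1.login("abc123", "alice")
stateless_result = az2.current_user("abc123")  # same failover, session survives

print(stateful_result, stateless_result)
```

In the stateful case the AZ2 server has never seen the session, so the user is logged out. In the stateless case any server can pick the session up, provided the shared store itself is not confined to the failed AZ.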

With these simple principles, coupled with effective use of the AWS building blocks, one can definitely build an infrastructure that is truly Highly Available. Of course, these are lessons that one learns as the Cloud delivery model matures, and as architects we have to keep fine-tuning the architecture after going live as well.

Note: All the above thoughts are aimed at increasing uptime during problems with a single AZ. Last year we saw a different problem in AWS, where a configuration change, coupled with the architecture of EBS, caused a service disruption across an entire region (US-East). Infrastructure can be designed to withstand such large disruptions as well. However, such designs come with the very high cost of replicating data over the internet, and in most cases the cost of operating such an infrastructure outweighs the benefits.